A Pattern Every Modern Developer Should Know: CQRS

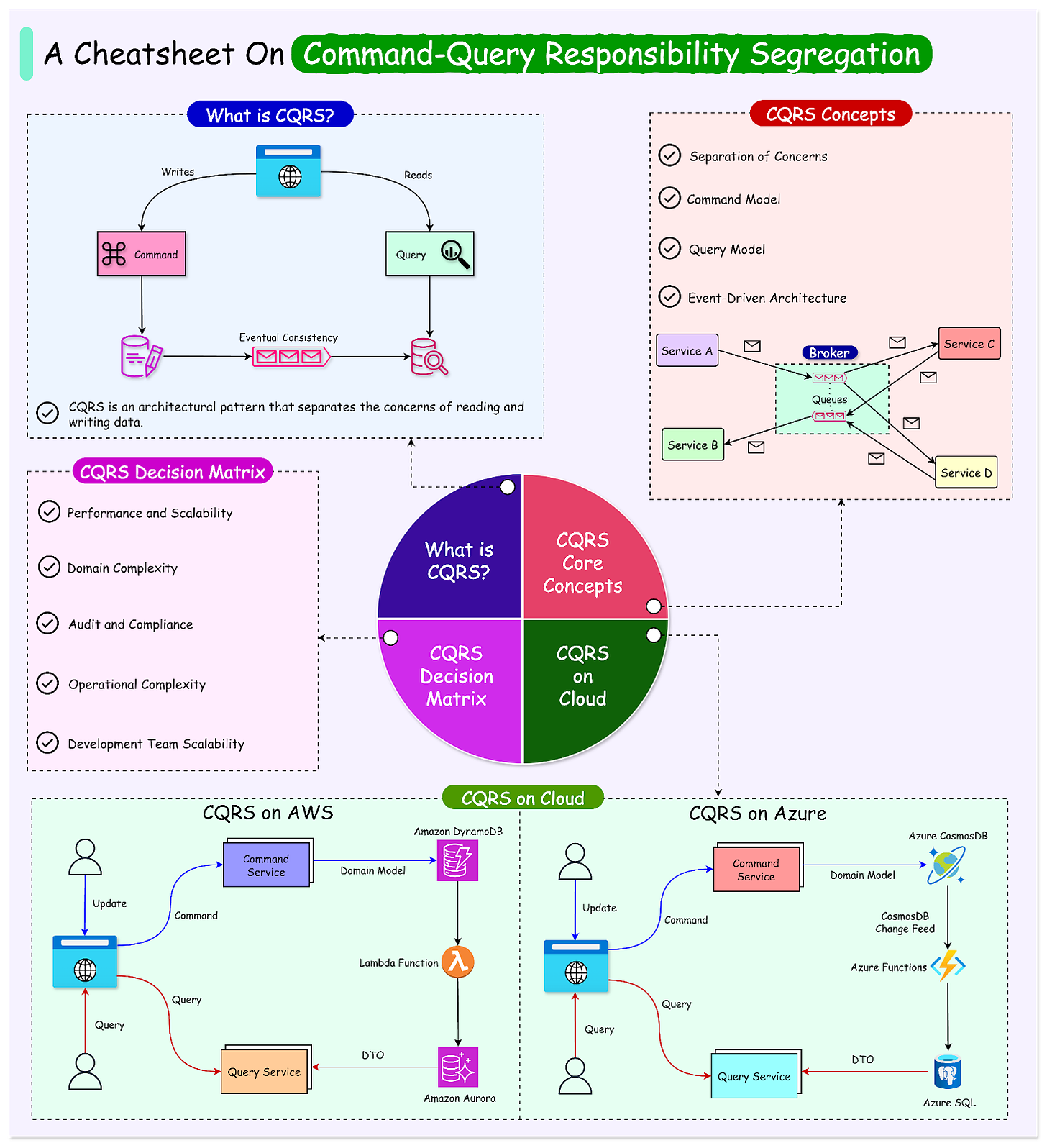

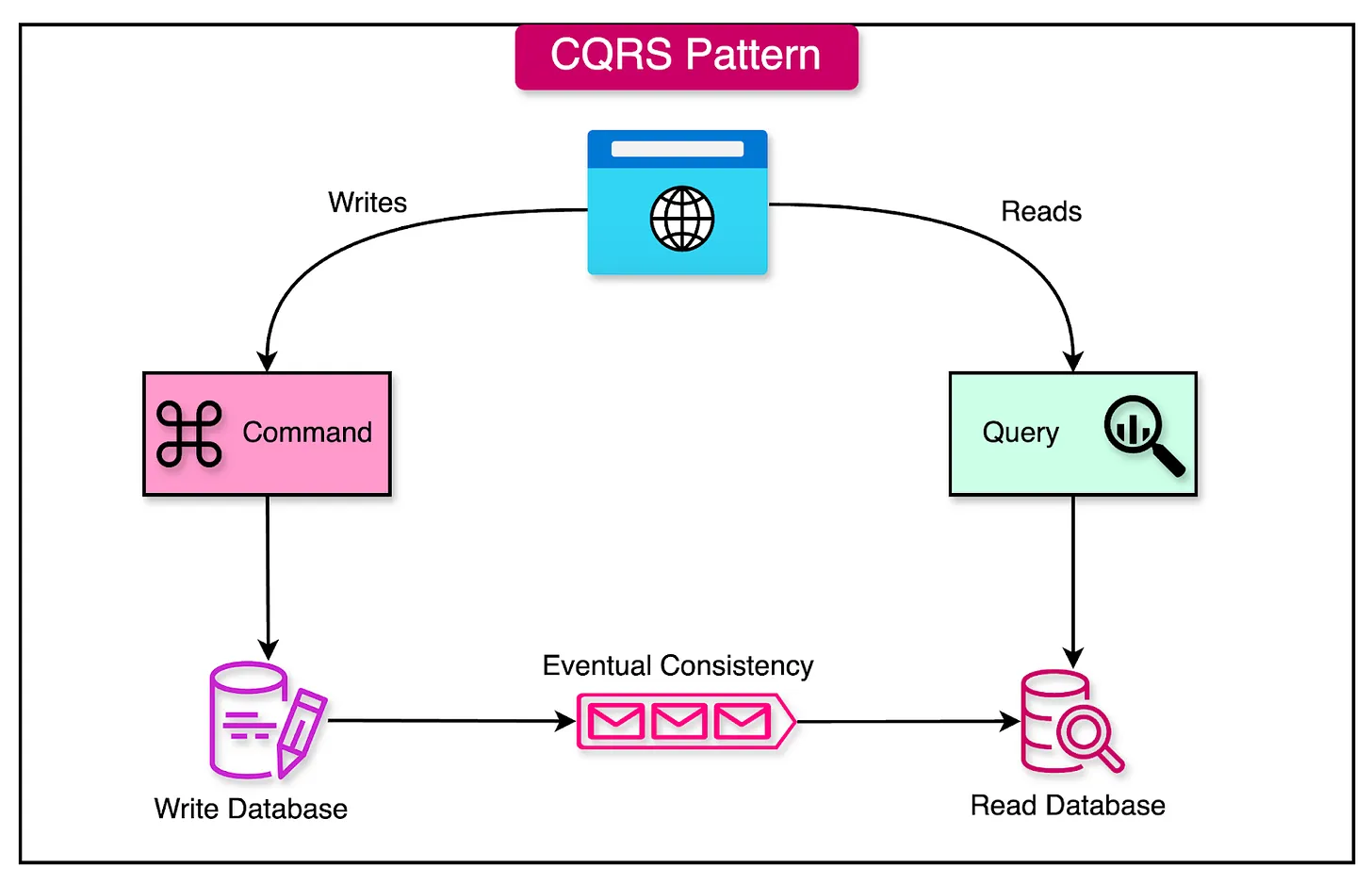

CQRS, which stands for Command Query Responsibility Segregation, is an architectural pattern that separates the concerns of reading and writing data.

It divides an application into two distinct parts:

- The Command Side: Responsible for managing create, update, and delete requests.

- The Query Side: Responsible for handling read requests.

The CQRS pattern was first introduced by Greg Young, a software developer and architect, in 2010. He described it as a way to separate the responsibility of handling commands (write operations) from handling queries (read operations) in a system.

The origins of CQRS can be traced back to the Command-Query Separation (CQS) principle, introduced by Bertrand Meyer. CQS states that every method should either be a command that performs an action or a query that returns data, but not both. CQRS takes the CQS principle further by applying it at an architectural level, separating the command and query responsibilities into different models, services, or even databases.

Since its introduction, CQRS has gained popularity in the software development community, particularly in the context of domain-driven design (DDD) and event-driven architectures.

It has been successfully applied in various domains, such as e-commerce, financial systems, and collaborative applications, where performance, scalability, and complexity are critical concerns.

In this post, we’ll learn about CQRS in comprehensive detail. We will cover the various aspects of the pattern along with a decision matrix on when to use it.

Core Concepts of CQRS

The overall CQRS pattern is made up of a few core concepts:

- Separation of Command and Query models

- Command Model

- Query Model

- Event-Driven Architecture

The diagram below shows a simple view of the CQRS pattern

To understand things better, let us look at each core concept in greater detail:

Separation of Command Model From Query Model

The fundamental principle of CQRS is the separation of the command model from the query model

- Command Model: Responsible for handling write operations and updating the system state.

- Query Model: Dedicated to providing efficient and flexible data access for read operations.

This separation enables each model to be optimized independently based on its specific requirements, enhancing the scalability, performance, and maintainability of complex systems.

Command Model (Write Operations)

The command model in CQRS handles all write operations, including creating, updating, and deleting data.

Key aspects of the command model include:

- Task-Based Operations: Commands are typically represented as task-based operations (e.g., “CreateOrder” or “UpdateCustomerAddress”) rather than generic CRUD operations.

- Command Handlers: Process commands by executing the corresponding business logic and persisting changes to the write database.

- Event Sourcing: The command model may incorporate event sourcing, where the system state is determined by a sequence of events, providing a complete audit trail and enabling powerful capabilities like event replay and temporal queries. More on this in a later section.

Query Model (Read Operations)

The query model in CQRS handles all read operations and provides efficient data access for querying and reporting purposes.

Key features of the query model include:

- Optimized Data Structures: These could be denormalized data structures or materialized views for fast and flexible data retrieval.

- Tailored Structure: The query model can have a different structure than the command model, specifically designed for the read scenarios required by the application.

- Data Aggregation: The query model may aggregate data from multiple sources, precompute complex calculations, or generate read-friendly data projections.

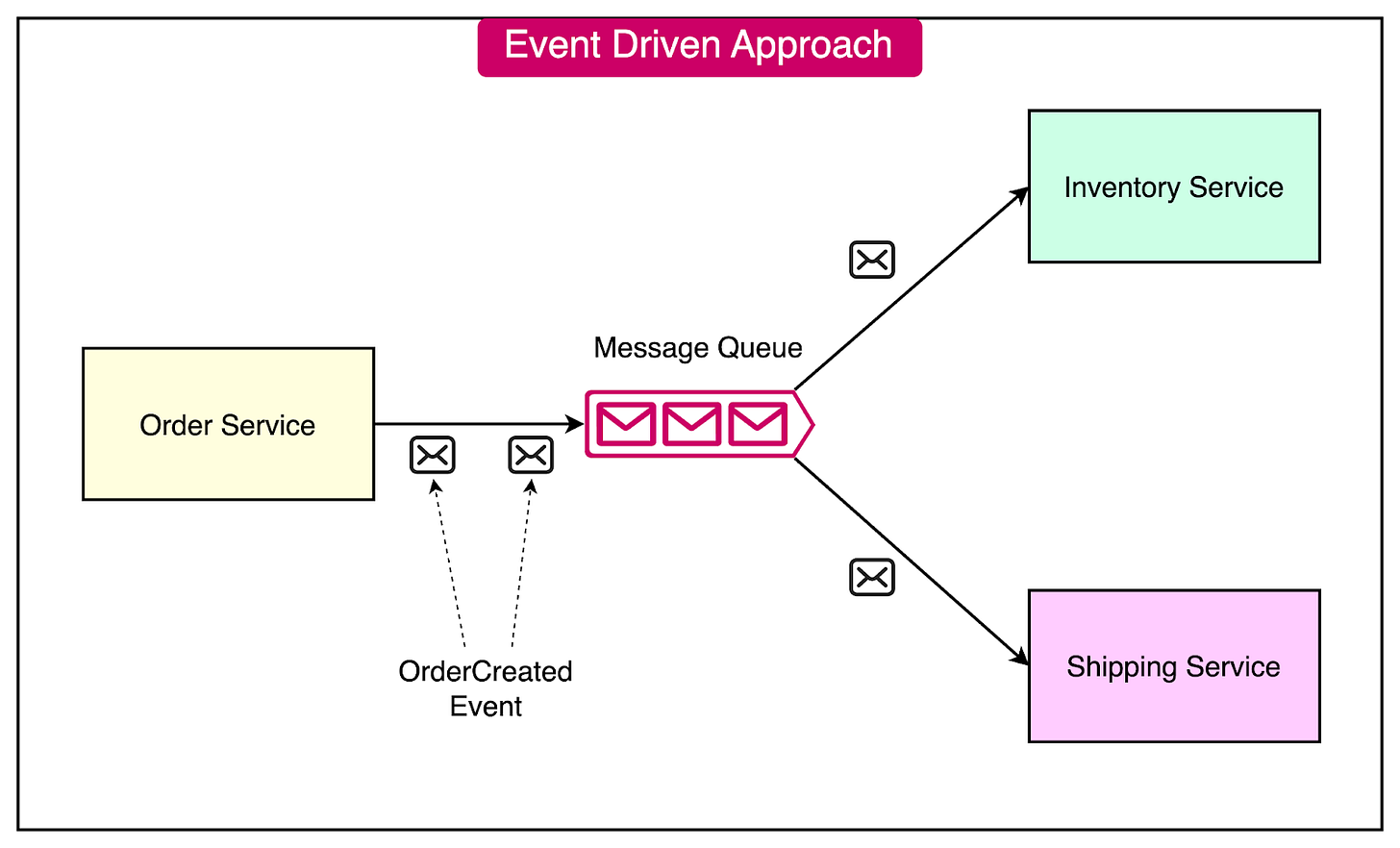

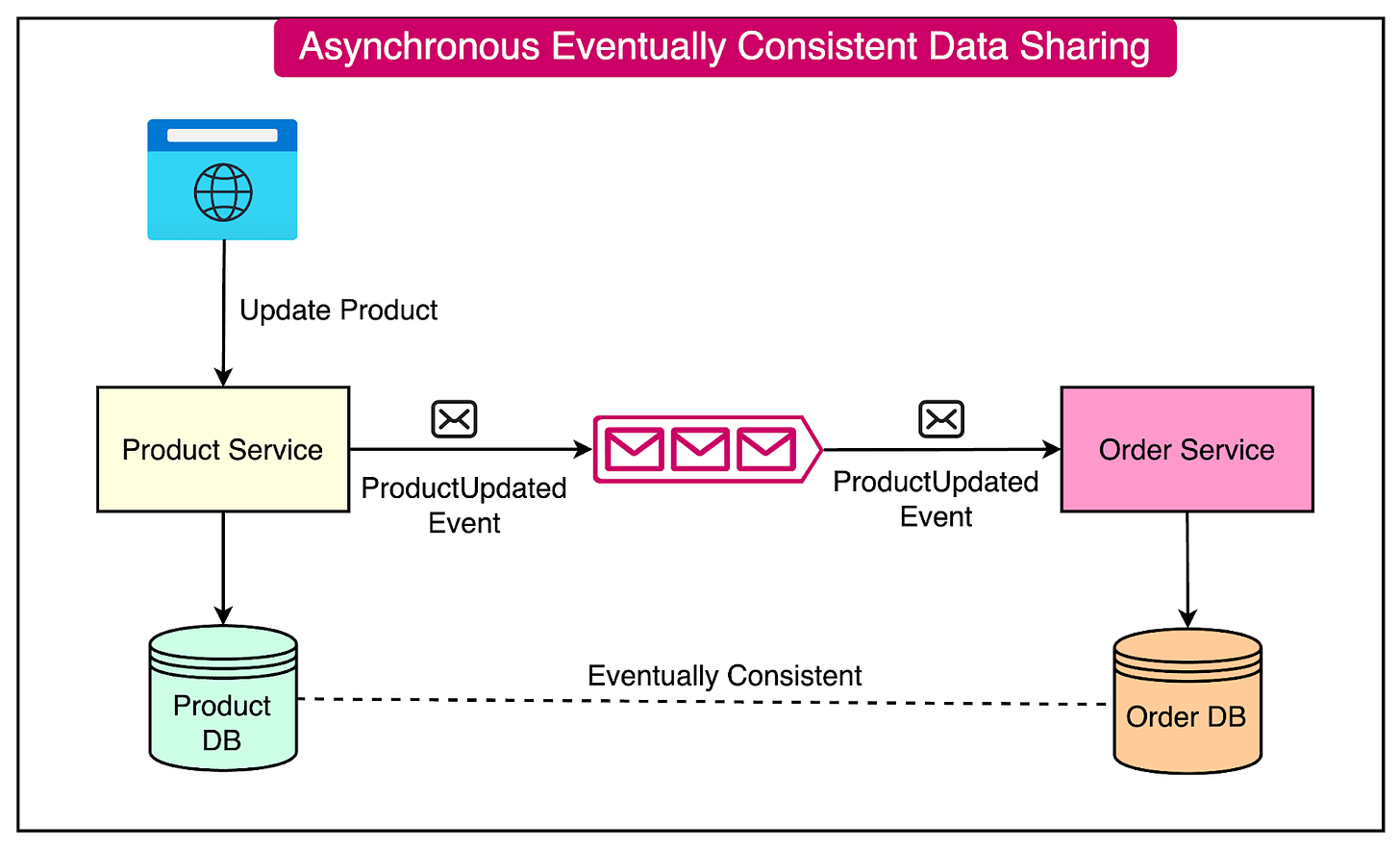

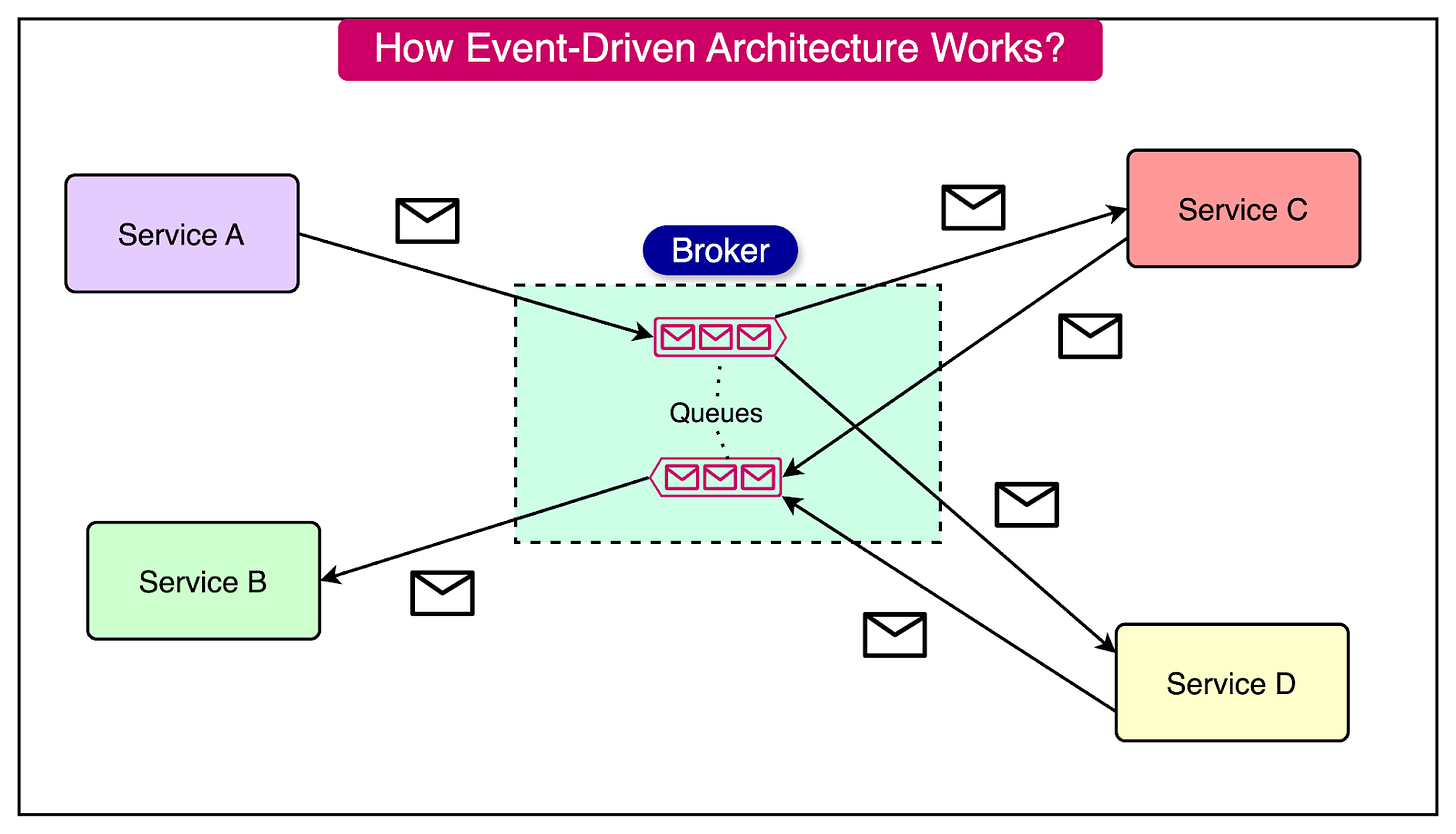

Event-Driven Architecture

CQRS often integrates with event-driven architectures, where changes in the system state are propagated as events.

This integration provides several benefits:

- Asynchronous Communication: When a command is processed and the write model is updated, events are generated to notify other parts of the system, including the query model.

- Eventual Consistency: The query model can subscribe to these events and update its data stores, ensuring eventual consistency between the command and query sides.

- Loose Coupling: Event-driven communication allows for loose coupling between models. This enables independent scaling and evolution.

- System Integration: Facilitates integration with other systems and enables complex business processes to be modeled as a series of events.

CQRS in Action

Let us understand more about CQRS using the e-commerce domain as an example.

E-commerce platforms often face challenges in managing product inventory, orders, and customer data due to the conflicting requirements of read or write operations.

Traditional e-commerce architectures that use a single data model for both read and write operations face several limitations:

- Performance Impact: Complex queries for product listings and recommendations can slow down the system and impact the performance of write operations like inventory updates and order processing.

- Scalability Challenges: Managing high traffic during peak shopping periods can be challenging due to resource contention between read and write operations on the same data model.

- Feature Development Complexity: Implementing new features that require changes to the data model can be difficult and may necessitate costly migrations.

Applying the Command Query Responsibility Segregation (CQRS) pattern can address these challenges by separating the read and write models.

Write (Command) Model

The command model works as follows:

- Responsibilities: Handles inventory updates, order placements, and customer data modifications.

- Optimization: Optimized for fast, low-latency write operations using a database like MongoDB or Cassandra.

- Event Publishing: Publishes events for each command, such as “ProductAdded”, “OrderPlaced”, or “CustomerAddressUpdated”.

Read (Query) Model

The query model works as follows:

- Responsibilities: Handles product listings, search queries, order history, and customer profile views.

- Optimization: Optimized for fast, complex read queries.

- Event Subscription: Subscribes to events from the write model and updates its data stores accordingly, ensuring eventual consistency.

Implementing CQRS

Implementing Command Query Responsibility Segregation (CQRS) in a system involves several architectural patterns that enhance the overall design and performance.

Two key patterns that often go hand in hand with CQRS are Event Sourcing and Microservices.

CQRS also encourages a task-based approach to user interface (UI) design, leading to more intuitive and efficient user experiences.

Let’s look at them in more detail:

Event Sourcing and Its Synergy with CQRS

Event Sourcing is an architectural pattern that involves storing the state of a system as a sequence of events rather than just the current state. This approach aligns well with CQRS, particularly on the command side.

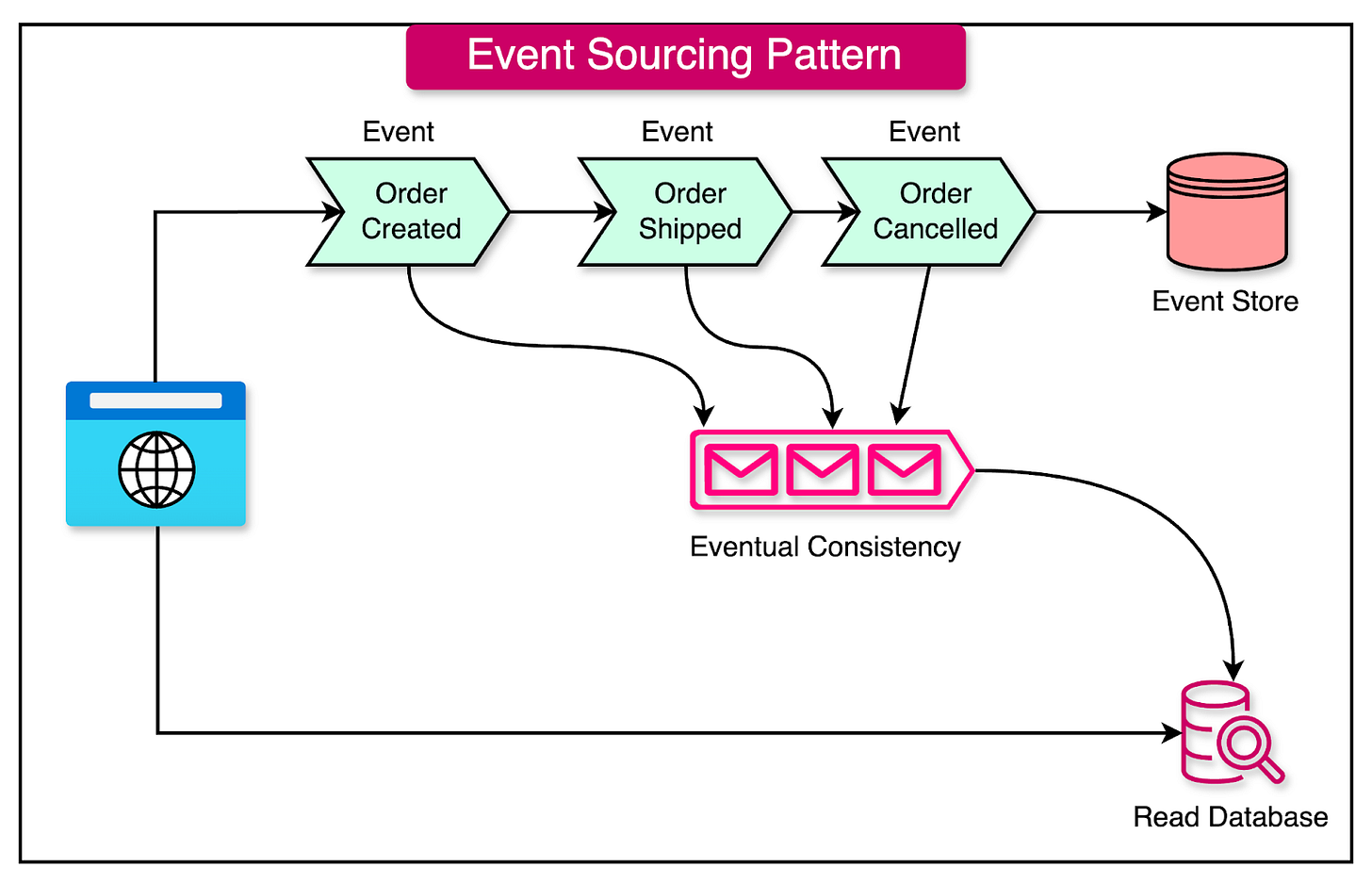

See the diagram below that shows the concept of event sourcing

Key aspects of this synergy include:

- Natural Fit with the Command Model: In CQRS, commands can be easily translated into events, which are then stored in an append-only event store. This natural fit simplifies the implementation of the command model.

- Audit Trail and Historical Reconstruction: Event Sourcing provides a complete history of state changes, enabling powerful auditing capabilities and the ability to reconstruct past states. This feature is valuable for systems that require strong auditing and compliance.

- Flexibility in Read Model Creation: The event stream generated by Event Sourcing can be used to build and rebuild various read models, allowing for greater flexibility in how data is presented and queried. This flexibility enables the system to adapt to changing requirements and optimize read performance.

- Scalability: CQRS and Event Sourcing support independent scaling of read and write operations, enhancing overall system performance. The separation of concerns allows for optimized resource allocation and improved responsiveness.

Task-Based UI Design

CQRS encourages a task-based approach to UI design, which can lead to more intuitive and efficient user interfaces:

- Command-Oriented Interactions: UIs can be designed around specific user tasks (commands) rather than CRUD operations on data entities. This approach aligns the UI more closely with the user's goals and intentions.

- Simplified Validation: Task-based UIs can incorporate client-side validation, reducing the likelihood of server-side command failures. By validating user input before sending commands to the server, the system can provide faster feedback and a smoother user experience.

- Alignment with Domain Model: Task-based UIs often align more closely with the underlying domain model, improving overall system coherence. By reflecting the domain concepts and operations in the UI, the system becomes more understandable and maintainable.

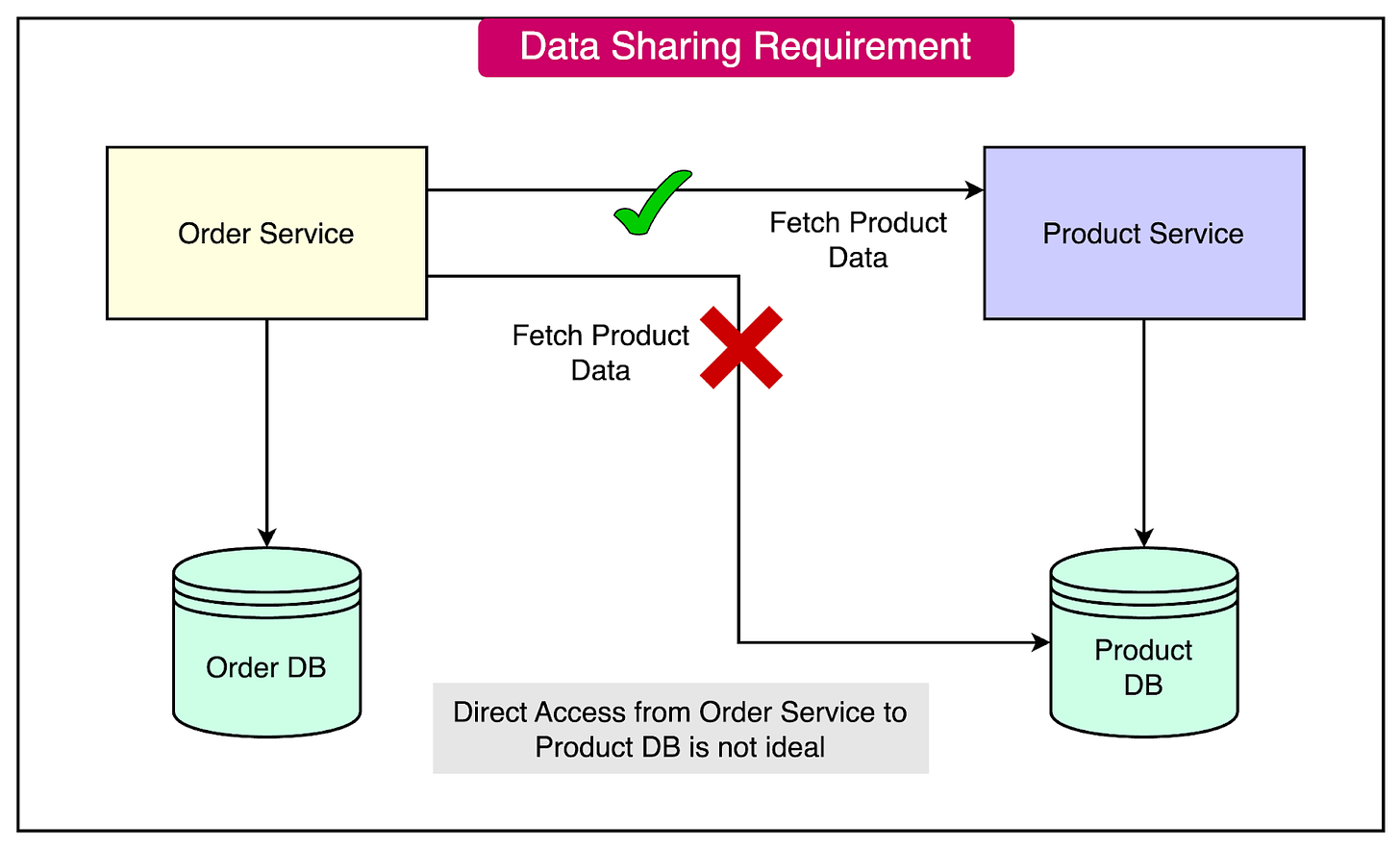

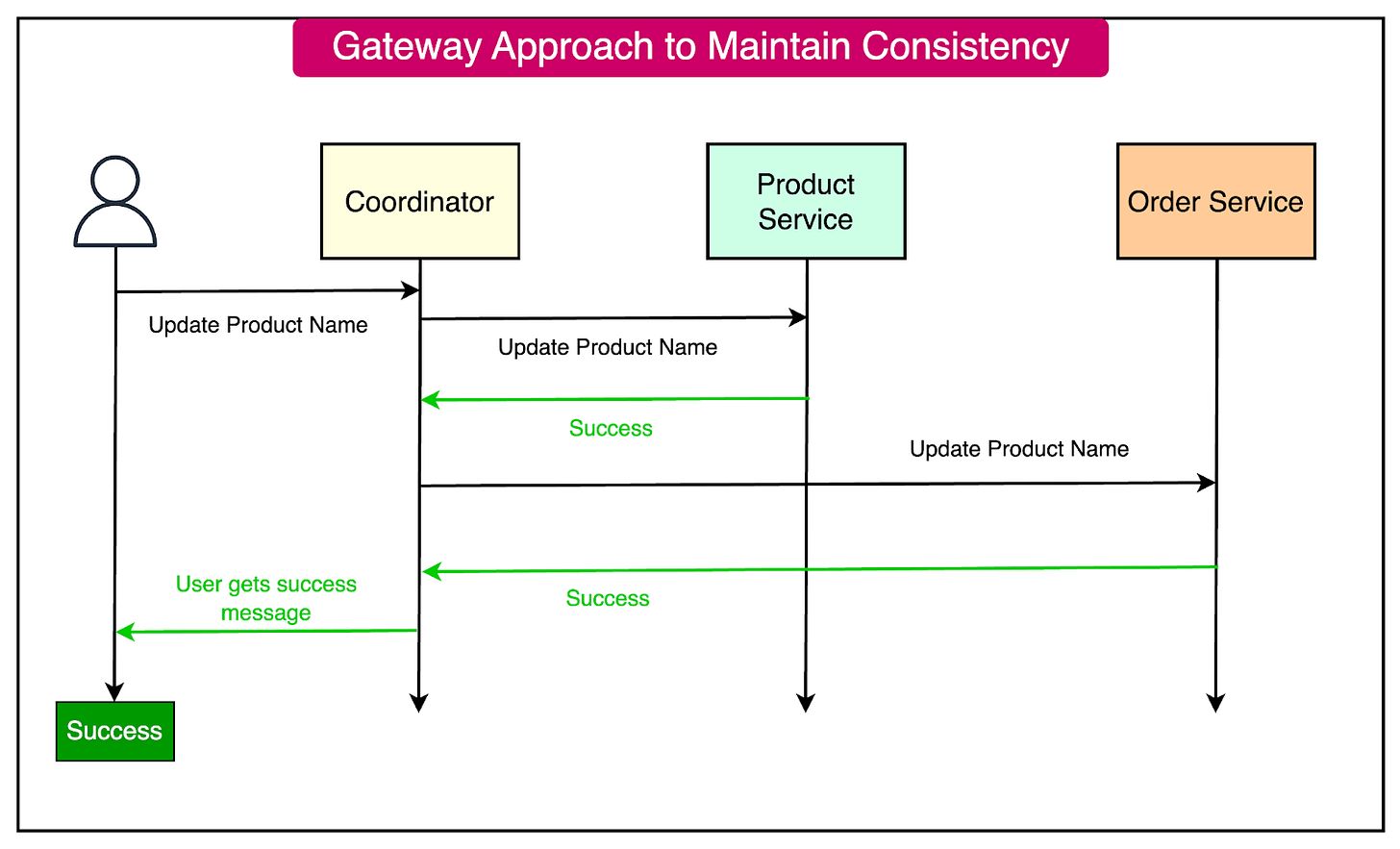

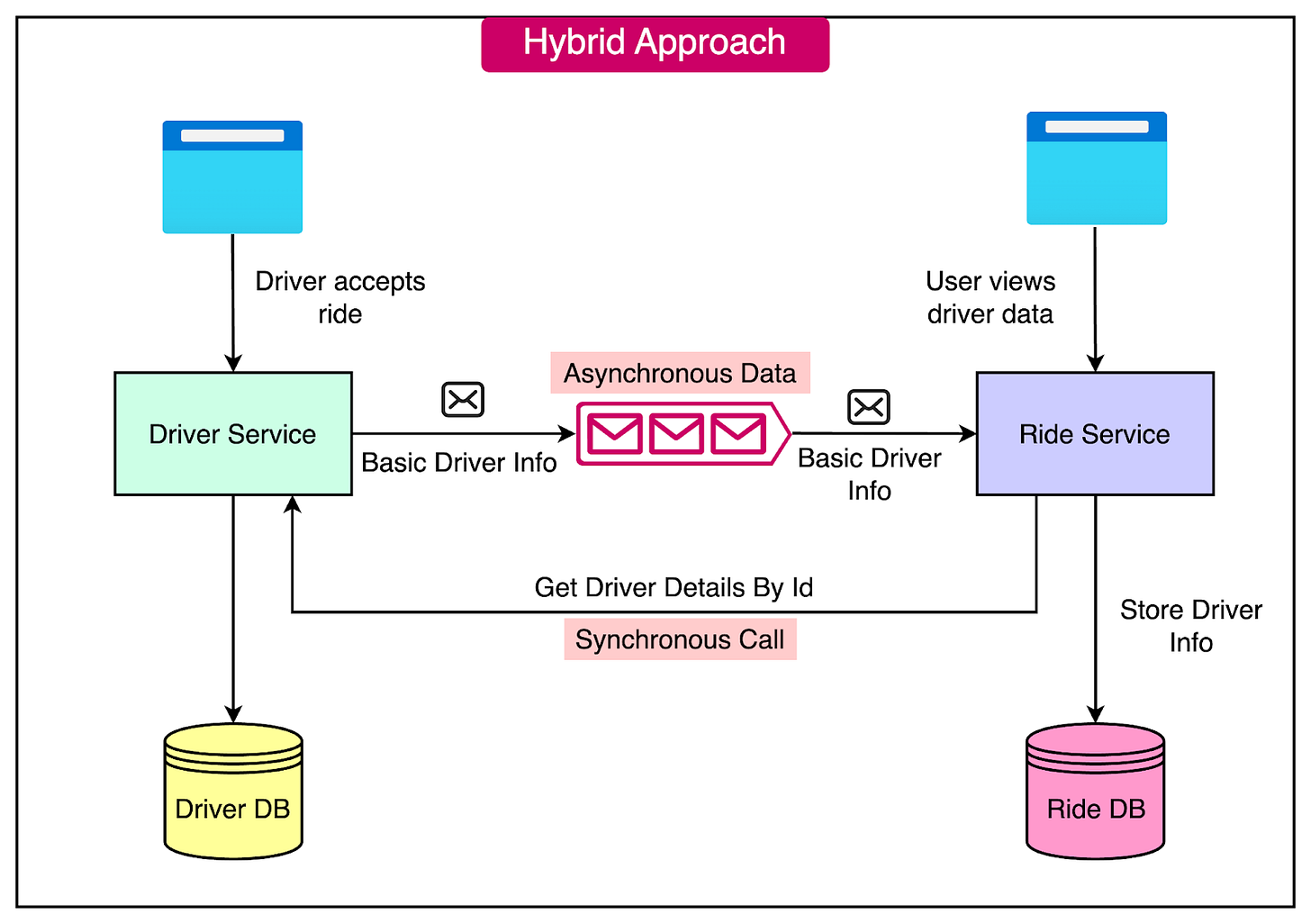

Microservices and CQRS

CQRS can be effectively implemented within a microservices architecture, offering several benefits:

- Bounded Contexts: CQRS aligns well with the concept of bounded contexts in Domain-Driven Design, which is often used in microservices architectures. Each microservice can have its bounded context, with its own command and query models.

- Independent Deployment: The separation of command and query models in CQRS allows for independent deployment and scaling of these components as separate microservices. This independence enables more flexible and targeted scaling based on the specific demands of each model.

- Polyglot Persistence: With CQRS, different microservices can use different data stores optimized for their specific read or write requirements. This polyglot persistence approach allows for choosing the most suitable database technology for each microservice.

- Event-Driven Communication: Microservices can communicate changes through events, which fits naturally with the CQRS model. Events can be used to propagate updates and maintain consistency across multiple microservices.

CQRS on Cloud Platforms

Cloud platforms like Amazon Web Services (AWS) and Microsoft Azure provide a range of services that facilitate the implementation of the Command Query Responsibility Segregation (CQRS) pattern.

Do note that it is not necessary to use cloud platforms to implement the pattern. However, these platforms offer scalable, managed services that align well with the principles of CQRS, enabling developers to build high-performance, resilient systems with reduced operational overhead.

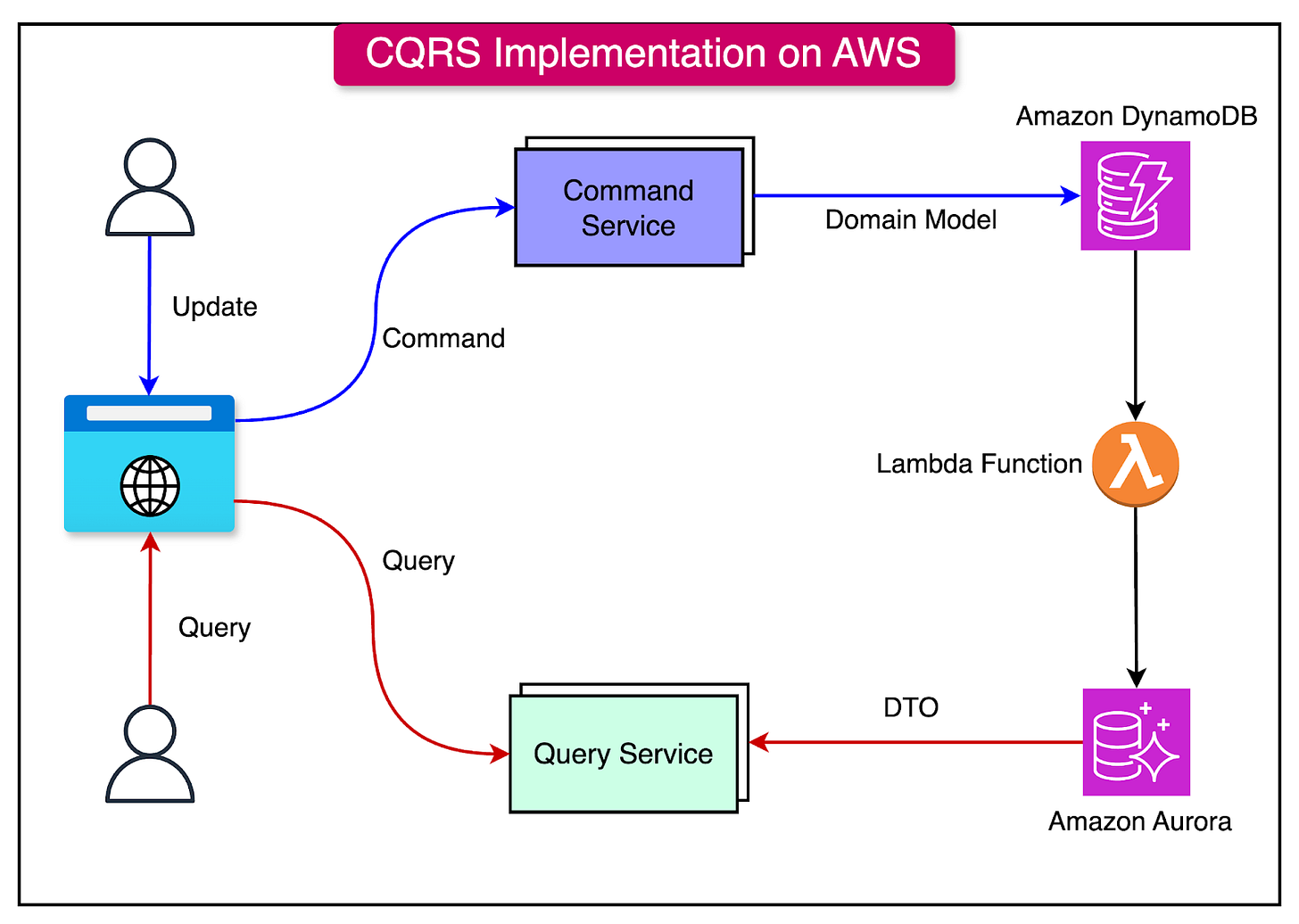

AWS Implementation (using DynamoDB and Aurora)

AWS provides several services that can be used to implement the CQRS pattern effectively.

A common approach is to use DynamoDB for the write model and Aurora for the read model. Some key points about the implementation are as follows:

- Write Model with DynamoDB: DynamoDB, a fully managed NoSQL database, is well-suited for the command side due to its ability to handle high-volume write operations with low latency and high throughput. Its flexible schema allows for easy storage of event data.

- Read Model with Aurora: For the query side, Amazon Aurora, a relational database compatible with MySQL and PostgreSQL, offers high read scalability and performance. It can handle complex queries and provide read-optimized views of the data.

- Synchronization with DynamoDB Streams: DynamoDB streams can be used to keep the read model in sync with the write model. Any updates to the write model in DynamoDB can trigger a Lambda function that processes the changes and updates the read model in Aurora accordingly, ensuring eventual consistency between the two models.

See the diagram below for reference:

The main benefits of this approach include:

- Scalability: DynamoDB and Aurora can scale independently based on the read-and-write workloads.

- Performance: DynamoDB optimizes writes, while Aurora optimizes complex reads.

- Serverless: Both services offer serverless options, enabling pay-per-use pricing.

- Managed Services: AWS handles the operational tasks, reducing the burden on the development team.

Azure Implementation

Azure also provides various services that enable the implementation of CQRS.

A typical approach involves using Azure Cosmos DB for the write model and Azure SQL Database for the read model.

- Write Model with Cosmos DB: Azure Cosmos DB, a globally distributed, multi-model database service, is suitable for the command side. It offers high write throughput, low latency, and automatic scaling. Its flexible data model allows for easy storage of events and aggregates.

- Read Model with SQL Database: For the query side, Azure SQL Database, a fully managed relational database service, provides high performance and scalability for read-heavy workloads. It supports complex queries and can be used to create read-optimized views of the data.

- Synchronization with Cosmos DB Change Feed: To propagate changes from the write model to the read model, Azure Functions can be triggered by the Cosmos DB change feed. These functions can process the events and update the read model in the Azure SQL Database, ensuring eventual consistency.

See the diagram below for reference:

The key benefits of this approach include:

- Global Distribution: Cosmos DB enables the global distribution of data, reducing latency for users worldwide.

- Scalability: Cosmos DB and SQL Database can scale independently based on workload requirements.

- Flexibility: Cosmos DB supports multiple data models, allowing for flexibility in event storage.

- Managed Services: Azure handles the management and operations of the databases, reducing overhead.

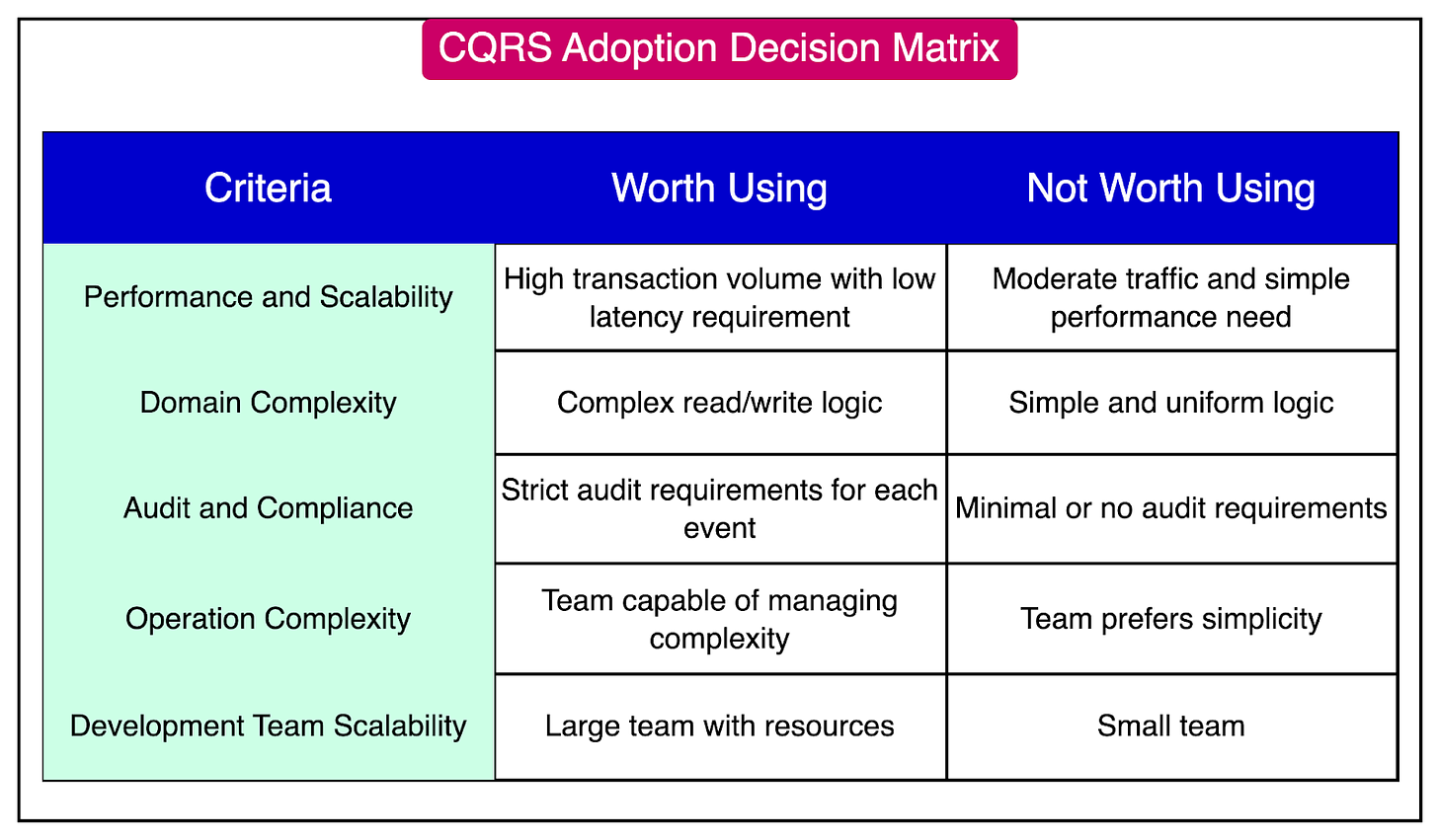

When to Use CQRS: A Decision Framework

Deciding whether to apply the Command Query Responsibility Segregation (CQRS) pattern to a system requires careful consideration of various factors.

This decision framework helps evaluate the suitability of CQRS based on the system's specific requirements and characteristics.

Performance and Scalability Needs

CQRS can be particularly beneficial for systems with high performance and scalability demands, especially when the read-and-write workloads have different requirements:

- High Read-to-Write Ratio: If the system experiences a significantly higher number of read operations compared to write operations, CQRS allows optimizing the read side independently, potentially using a separate read-optimized data store.

- Independent Scaling: CQRS enables scaling the read and write sides separately based on their respective loads, allowing for better resource utilization and cost optimization.

However, for systems with moderate traffic and simple performance needs, the added complexity of CQRS may not be justified.

Domain Complexity

The complexity of the business domain is a key factor in deciding whether to use CQRS:

- Complex Business Logic: If the domain involves intricate business rules and logic that differ significantly between read and write operations, CQRS can help manage this complexity by separating the concerns.

- Simple CRUD Operations: For systems with straightforward create, read, update, and delete (CRUD) operations, such as a basic blog or to-do list application, CQRS may introduce unnecessary complexity.

It's important to note that CQRS should be applied selectively to specific bounded contexts or subdomains where it provides the most value, rather than enforcing it across the entire system.

Audit and Compliance Requirements

Systems with strict auditing and compliance requirements can benefit from CQRS, particularly when combined with event sourcing:

- Detailed Audit Trails: CQRS with event-sourcing enables capturing all state changes as events, providing a complete audit trail for regulatory compliance and historical analysis.

- Temporal Queries: Event sourcing allows querying the system's state at any point in time, which can be valuable for auditing and debugging purposes.

Operational Complexity Tolerance

Implementing CQRS introduces additional operational complexity, which should be considered based on the team's capabilities and project constraints:

- Skilled Team: If the development team has experience with CQRS and can effectively manage the increased complexity, it can be a viable option for complex domains.

- Limited Resources: For smaller teams or projects with tight deadlines, the learning curve and operational overhead associated with CQRS may not be feasible.

Development Team Capability

The capability and structure of the development team can influence the decision to adopt CQRS:

- Large, Distributed Teams: CQRS can be beneficial for large development teams working on different aspects of the system, as it allows for a clear separation of responsibilities and independent development of the read-and-write models.

- Small, Co-located Teams: For smaller teams working closely together, the added complexity of CQRS may not provide significant benefits and potentially hinder productivity.

Summary

In this article, we’ve taken a detailed look at Command Query Responsibility Segregation (CQRS).

Let’s summarize our learnings in brief:

- CQRS is an architectural pattern that separates the concerns of reading and writing data.

- The core concepts of CQRS include separation of concerns, command model, query model, and event-driven architecture.

- CQRS mainly consists of two functionalities – the write (command) model and the read (query) model.

- Event Sourcing is an architectural pattern that involves storing the state of a system as a sequence of events rather than just the current state. This approach aligns well with CQRS, particularly on the command side.

- CQRS encourages a task-based approach to UI design, which can lead to more intuitive and efficient user interfaces.

- CQRS can be effectively implemented within a microservices architecture, offering several benefits:

- Cloud platforms like Amazon Web Services (AWS) and Microsoft Azure provide a range of services that facilitate the implementation of the Command Query Responsibility Segregation (CQRS) pattern.

- Deciding whether to apply the Command Query Responsibility Segregation (CQRS) pattern to a system requires careful consideration of various factors such as performance, scalability, domain complexity, audit, compliance, operational complexity, and the scalability of the development team.